Introduction

The DSpace API was originally created in 2002, very little has changed in the way we work with our API. After working with the API day in day out for over 7 years I felt it was time for a change. In this proposal I will highlight the issues that the current API has and suggest solutions for these issues.

How things are now

Bad distribution of responsibilities

Currently every database object (more or less) corresponds to a single java class. This single java class contains the following logical code blocks:

- CRUD methods (Create & Retrieve methods are static)

- All business logic methods

- Database logic (queries, updating, …)

- All getters & setters of the object

- ….

Working with these types of GOD classes, we get the following disadvantages:

- By grouping all of these different functionality's into a single class it is sometimes hard (impossible ?) to see which methods are setters/getters/business logic since everything is thrown onto a big pile.

- Makes it very hard to make/track local changes since you need to overwrite the entire class to make a small change.

- Refactoring/altering GOD classes becomes hard since it is unclear where the database logic begins & where the business logic is located.

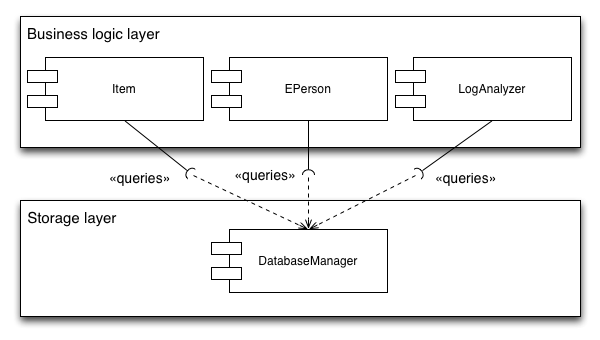

Database layer access

- EPerson table is queried from the Groomer and EPerson class.

- Item is queried from the LogAnalyser, Item, EPerson classes

This makes it hard to make changes to a database table since it is unclear from which classes the queries are coming from. Below is a schematic overview of the usage of the item class:

Postgres/oracle support

String params = "%"+query.toLowerCase()+"%";

StringBuffer queryBuf = new StringBuffer();

queryBuf.append("select e.* from eperson e " +

" LEFT JOIN metadatavalue fn on (resource_id=e.eperson_id AND fn.resource_type_id=? and fn.metadata_field_id=?) " +

" LEFT JOIN metadatavalue ln on (ln.resource_id=e.eperson_id AND ln.resource_type_id=? and ln.metadata_field_id=?) " +

" WHERE e.eperson_id = ? OR " +

"LOWER(fn.text_value) LIKE LOWER(?) OR LOWER(ln.text_value) LIKE LOWER(?) OR LOWER(email) LIKE LOWER(?) ORDER BY ");

if(DatabaseManager.isOracle()) {

queryBuf.append(" dbms_lob.substr(ln.text_value), dbms_lob.substr(fn.text_value) ASC");

}else{

queryBuf.append(" ln.text_value, fn.text_value ASC");

}

// Add offset and limit restrictions - Oracle requires special code

if (DatabaseManager.isOracle())

{

// First prepare the query to generate row numbers

if (limit > 0 || offset > 0)

{

queryBuf.insert(0, "SELECT /*+ FIRST_ROWS(n) */ rec.*, ROWNUM rnum FROM (");

queryBuf.append(") ");

}

// Restrict the number of rows returned based on the limit

if (limit > 0)

{

queryBuf.append("rec WHERE rownum<=? ");

// If we also have an offset, then convert the limit into the maximum row number

if (offset > 0)

{

limit += offset;

}

}

// Return only the records after the specified offset (row number)

if (offset > 0)

{

queryBuf.insert(0, "SELECT * FROM (");

queryBuf.append(") WHERE rnum>?");

}

}

else

{

if (limit > 0)

{

queryBuf.append(" LIMIT ? ");

}

if (offset > 0)

{

queryBuf.append(" OFFSET ? ");

}

}

Alternate implementation of static flows

// Should we send a workflow alert email or not?

if (ConfigurationManager.getProperty("workflow", "workflow.framework").equals("xmlworkflow")) {

if (useWorkflowSendEmail) {

XmlWorkflowManager.start(c, wi);

} else {

XmlWorkflowManager.startWithoutNotify(c, wi);

}

} else {

if (useWorkflowSendEmail) {

WorkflowManager.start(c, wi);

}

else

{

WorkflowManager.startWithoutNotify(c, wi);

}

}

if(ConfigurationManager.getProperty("workflow","workflow.framework").equals("xmlworkflow")){

// Remove any xml_WorkflowItems

XmlWorkflowItem[] xmlWfarray = XmlWorkflowItem

.findByCollection(ourContext, this);

for (XmlWorkflowItem aXmlWfarray : xmlWfarray) {

// remove the workflowitem first, then the item

Item myItem = aXmlWfarray.getItem();

aXmlWfarray.deleteWrapper();

myItem.delete();

}

}else{

// Remove any WorkflowItems

WorkflowItem[] wfarray = WorkflowItem

.findByCollection(ourContext, this);

for (WorkflowItem aWfarray : wfarray) {

// remove the workflowitem first, then the item

Item myItem = aWfarray.getItem();

aWfarray.deleteWrapper();

myItem.delete();

}

}

Local modifications

Take for example the WorkflowManager, this class consist of only static methods, so if a developer for an institution wants to alter one method the entire class needs to be overridden in the additions module. When the Dspace needs to be upgraded to a new version it is very hard to locate the changes in a class that is sometimes thousands of lines long.

Inefficient caching mechanism

DSpace caches all DSpaceObjects that are retrieved within a single lifespan of a context object. The cache is only cleared when the context is closed (but this is coupled with a database commit/abort) or when explicitly clearing the cache or removing a single item from the cache. When writing large scripts one must always keep in mind the cache, because if the cache isn't cleared DSpace will keep running until an OutOfMemory exception occurs. So contributions by users with a small dataset could lead to hard to detect memory leaks until the feature is tested with a lot of data.

Excessive database querying when retrieving objects

When retrieving a bundle from the database it automatically loads in all bitstreams linked to this bundle, for these bitstreams it retrieves the bitstreamFormat. For example when retrieving all bundles for an item to get the bundle names all files and their respective bitstream formats are loaded into memory. This leads to a lot of obsolete database queries which aren't needed. Improvements have been made on this section but it still requires a lot of coding to "lazy load" these objects.

Service based api

DSpace api structure

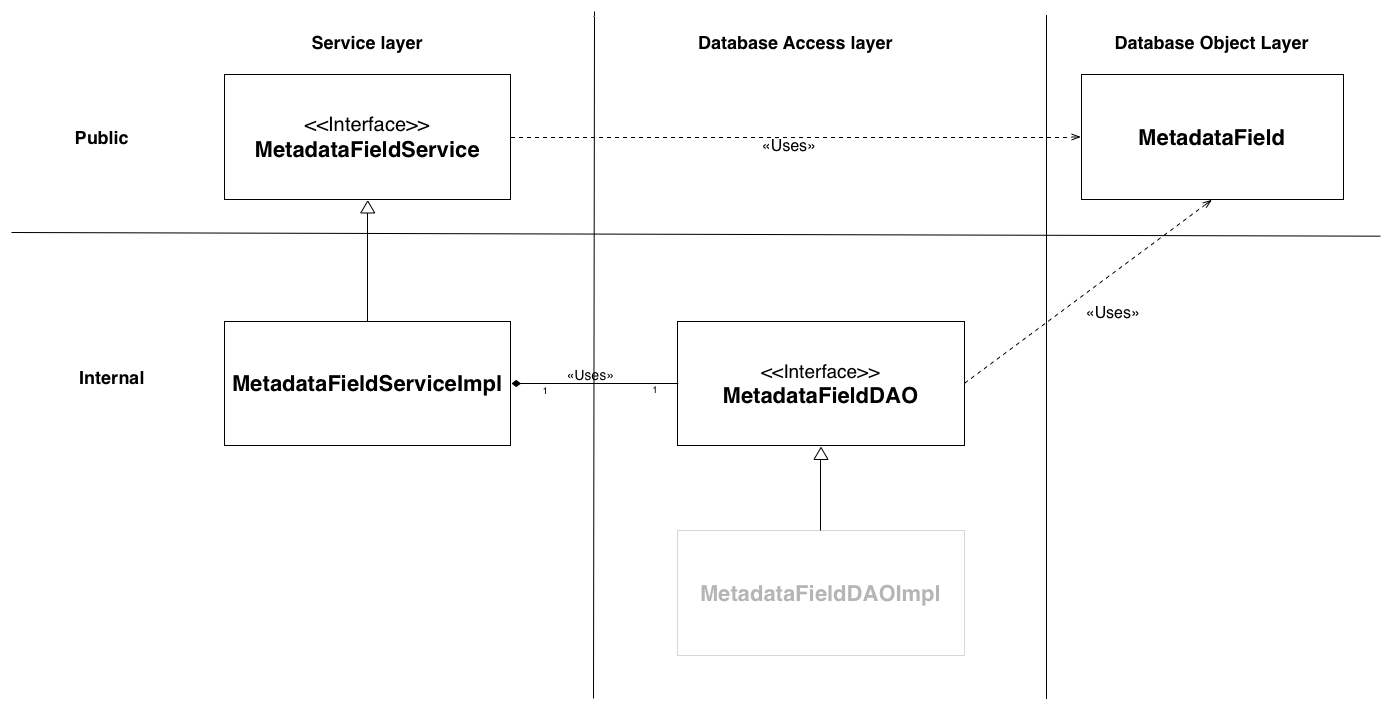

To fix the current problems with the API and make it more flexible and easier to use I propose we split the API in 3 layers:

Service layer

This layer would be our top layer, will be fully public and used by the CLI, JSPUI & XMLUI, .... Every service in this layer should be a stateless singleton interface. The services can be subdivided into 2 categories database based services and business logic block services.

The database based services are used to interact with the database as well as providing business logic methods for the objects. Every table in DSpace requires a single service linked to it. These services will not perform any database queries, it will delegate all database queries to the Database Access Layer discussed below.

The business logic services are replacing the old static DSpace Manager. For example the AuthorizeManager has been replaced with the AuthorizeService which contains the same methods and all the old code except that the AuthorizeService is an interface and a concrete (configurable) implemented class contains all the code. This class can be extended/replaced by another implementation without ever having to refactor the uses of this class.

Database Access Layer

As discussed above to continue working with a static DatabaseManager class that contains if postgres else oracle code will lead to loads of bugs we replace this “static class” by a new layer.

This layer is called the Database Acces Object layer (DAO layer) and no business logic, it's sole responsibility is to provide database access for each table (CRUD (create/retrieve/update/delete) calls). This layer consist entirely of interfaces which are automagically linked to implementations in the layers above (more on this later). The reason for interfaces is quite simple, by using interfaces we can easily replace our entire DAO layer without actually having to alter our service layer.

Each database table has its own DAO interface as opposed to the old DatabaseManager class, this is to support Object specific CRUD methods. Our metadataField DAO interface has a findByElement() method for example while an EPerson DAO interface would require a findByEmail() retrieval method. These methods are then called by the service layer which has similar methods.

The name of each class must end with DAO. A single database table can only be queried from a single DAO instance. A DAO instance cannot be accessed from outside the API and must be used in a single service instance. Linking a DAO to multiple services will result in the messy separation we are trying to create.

Database Object

Each table in the database that is not a linking table (collection2item is a linking table for example) is represented by a database object. This object contains no business logic and has setters/getters for all columns (these may be package protected if business logic is required). Each Data Object has its own DAO interface as opposed to the old DatabaseManager class, this is to support Object specific CRUD methods.

Schematic representation

Below is a schematic representation of how a refactored class would look (using the simple MetadataField class as an example):

The public layers objects are the only objects that can be accessed from other classes, the internal objects represent objects who's only usage is document below. This way the internal usage can change in it entire without ever affecting the DSpace classes that use the api.

Hibernate: the default DAO implementation

- Performance: When retrieving a bundle the bitstreams are loaded into memory, which in turn load in the bitstreamformat, which in turn loads in file extentions, ….. This consists of a lot of database queries. With hibernate you can use lazy loading to only load in certain linked objects at the moment they are requested.

- Effective cross database portability: No need to write “if postgres query X if oracle query Y” anymore, hibernate takes care of all of this for you !

- Developers’ Productivity: Although hibernate has a learning curve, once you get past this linking objects & writing queries takes considerably less time then the old way.

- Improved caching mechanism: The current DSpace caching mechanism throws objects onto a big pile until the pile is full which will result in an OutOfMemory exception. Hibernate auto caches all queries in a single session and even allows for users to configure which tables should be cached across sessions (application wide).

Service based api in DSpace (technical details)

Database Object: Technical details

Each non linking database table in DSpace must be represented by a single class containing getters & setters for the columns. Linked objects can also be represented by getters and setters, below is an example of the database object representation of MetadataField.

@Entity

@Table(name="metadatafieldregistry", schema = "public")

public class MetadataField {

@Id

@Column(name="metadata_field_id")

@GeneratedValue(strategy = GenerationType.AUTO ,generator="metadatafieldregistry_seq")

@SequenceGenerator(name="metadatafieldregistry_seq", sequenceName="metadatafieldregistry_seq", allocationSize = 1)

private Integer id;

@OneToOne(fetch = FetchType.LAZY)

@JoinColumn(name = "metadata_schema_id",nullable = false)

private MetadataSchema metadataSchema;

@Column(name = "element", length = 64)

private String element;

@Column(name = "qualifier", length = 64)

private String qualifier = null;

@Column(name = "scope_note")

@Lob

private String scopeNote;

protected MetadataField()

{

}

/**

* Get the metadata field id.

*

* @return metadata field id

*/

public int getFieldID()

{

return id;

}

/**

* Get the element name.

*

* @return element name

*/

public String getElement()

{

return element;

}

/**

* Set the element name.

*

* @param element new value for element

*/

public void setElement(String element)

{

this.element = element;

}

/**

* Get the qualifier.

*

* @return qualifier

*/

public String getQualifier()

{

return qualifier;

}

/**

* Set the qualifier.

*

* @param qualifier new value for qualifier

*/

public void setQualifier(String qualifier)

{

this.qualifier = qualifier;

}

/**

* Get the scope note.

*

* @return scope note

*/

public String getScopeNote()

{

return scopeNote;

}

/**

* Set the scope note.

*

* @param scopeNote new value for scope note

*/

public void setScopeNote(String scopeNote)

{

this.scopeNote = scopeNote;

}

/**

* Get the schema .

*

* @return schema record

*/

public MetadataSchema getMetadataSchema() {

return metadataSchema;

}

/**

* Set the schema record key.

*

* @param metadataSchema new value for key

*/

public void setMetadataSchema(MetadataSchema metadataSchema) {

this.metadataSchema = metadataSchema;

}

/**

* Return <code>true</code> if <code>other</code> is the same MetadataField

* as this object, <code>false</code> otherwise

*

* @param obj

* object to compare to

*

* @return <code>true</code> if object passed in represents the same

* MetadataField as this object

*/

@Override

public boolean equals(Object obj)

{

if (obj == null)

{

return false;

}

if (getClass() != obj.getClass())

{

return false;

}

final MetadataField other = (MetadataField) obj;

if (this.getFieldID() != other.getFieldID())

{

return false;

}

if (!getMetadataSchema().equals(other.getMetadataSchema()))

{

return false;

}

return true;

}

@Override

public int hashCode()

{

int hash = 7;

hash = 47 * hash + getFieldID();

hash = 47 * hash + getMetadataSchema().getSchemaID();

return hash;

}

public String toString(char separator) {

if (qualifier == null)

{

return getMetadataSchema().getName() + separator + element;

}

else

{

return getMetadataSchema().getName() + separator + element + separator + qualifier;

}

}

@Override

public String toString() {

return toString('_');

}

}